If you have managed Google Ads recently, you have likely felt the shift. Spend surges without structural changes. Performance degrades immediately after a “successful” optimization. Accounts go through recovery periods that feel suspiciously like retraining windows.

These behaviors are often attributed to platform opacity, bugs, or aggressive competition. That explanation is comforting, but it is wrong.

The issue is not that paid search has become less controllable. It is that the system being controlled is no longer deterministic. We are still teaching paid search as if it were a linear input-output machine: define keywords, set bids, and observe results.

That world no longer exists. Modern Google Ads is a Reinforcement Learning (RL) system operating under partial observability and delayed rewards. It does not merely execute instructions; it learns a policy based on the signals we emit.

To win in this environment, we have to stop acting like operators pushing buttons and start thinking like system designers training a model.

1. The Mental Model is Broken

The classical mental model of paid search assumes a stable mapping between inputs and outputs. You change bidding strategy, and performance changes predictably. You add a keyword, and intent is captured cleanly.

Today, however, the interface controls—bids, keywords, targets—are no longer instructions executed verbatim. They are inputs into a learning process. Google Ads behaves like a probabilistic system that continuously updates its internal decision-making logic based on observed outcomes.

This creates asymmetric risk: the same system that can scale performance can also entrench failure modes silently. If you don’t understand how the machine learns, you are likely teaching it to fail.

2. Formalizing the System: The Agent and The Environment

To reason correctly about strategy, we need to map paid search to the correct abstraction: Reinforcement Learning.

● The Agent: This is Google’s internal bidding, matching, and ranking models.

● The Environment: The auction marketplace (users, competitors, inventory, time).

● The State (Hidden): A high-dimensional representation including query

embeddings, user vectors, and contextual signals. Crucially, advertisers never see this state directly.

● The Reward: The conversions and conversion values you define.

● The Policy: The agent’s continuously updated mapping from state to action, optimized for long-term value.

This framing resolves a key misconception. Advertisers do not control the policy. We shape the reward signal that trains it.

3. Reward Shaping: You Get Exactly What You Ask For

In reinforcement learning, the agent optimizes the reward function with brutal obedience. If your reward signal (conversion tracking) is misaligned with your business reality, the system will still “succeed”, just not for you.

The Danger of Binary Rewards

Most advertisers rely on binary conversion events (e.g., “Lead Submitted”). From a Machine Learning perspective, this is a low-bandwidth signal. A binary signal collapses complex multi-dimensional outcomes into a single bit. If you optimize for “form fills,” the policy will seek segments that fill forms easilynot necessarily buyers. You didn’t optimize CPA; you created a reward that was easy to harvest.

Label Noise as Model Corruption

We often treat tracking errors, duplicates, or spam leads as “reporting annoyances.” In a learning system, this is label noise.

● Duplicate conversions

● Spam/Bot inflation

● Broken attribution

These don’t just mess up your Excel sheet; they train the model on corrupted feedback. Tracking quality is not analytics hygiene. It is policy training data integrity.

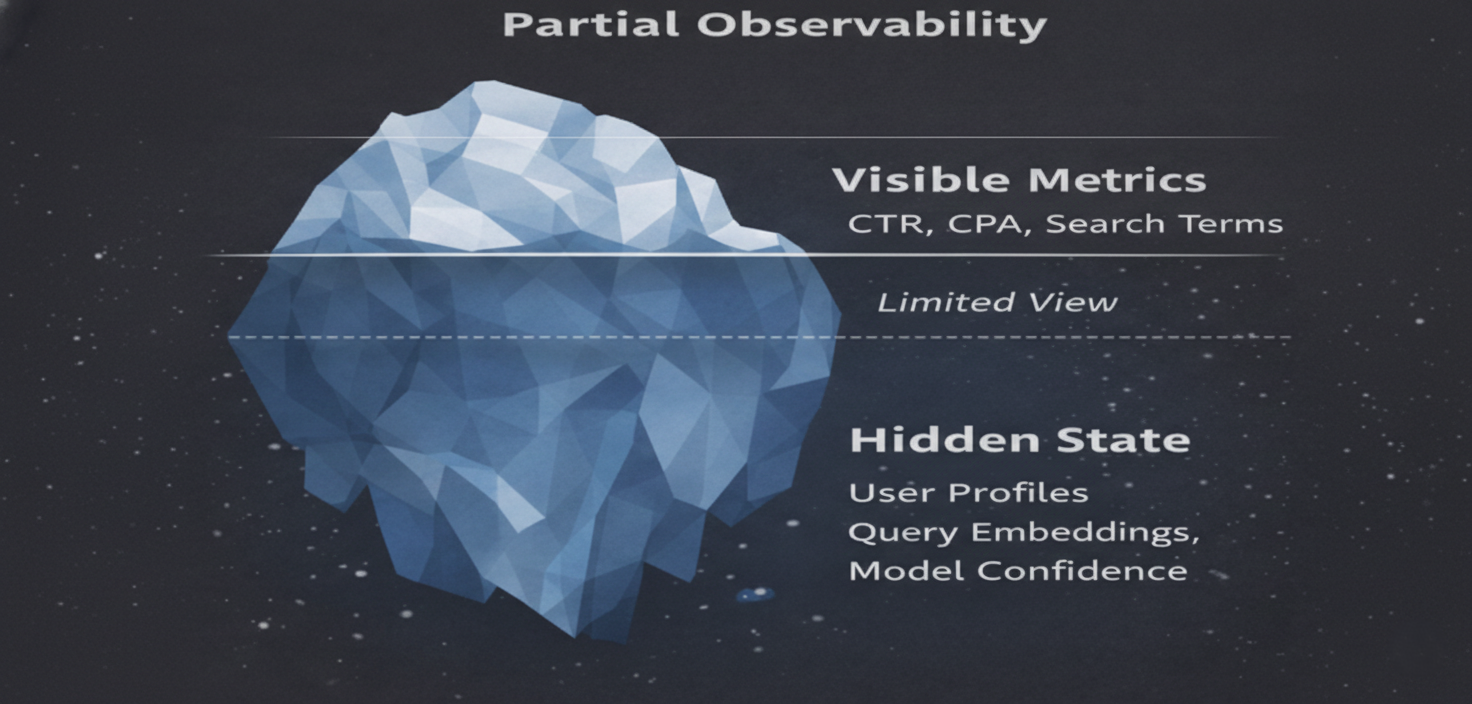

4. The Iceberg: Operating Under Partial Observability

In RL, the “State” is what the agent uses to decide actions. In paid search, the advertiser does not have access to the true state.

We see a “dashboard view”: CTR, CPA, Search Terms, and Quality Score. However, the machine is making decisions based on user profiles, vector embeddings, and model confidence estimates.

This leads to Proxy Worship. Advertisers optimize what they can see (CTR, Asset Grades), not what matters. But you can easily improve a proxy metric while degrading the actual policy.

The Interference Loop

Because we can’t see the true state, we micromanage. Constant bid adjustments, weekly structural rebuilds, and aggressive pruning prevent the system from converging. Every manual intervention changes the data distribution the policy is learning from. This creates an operator-induced oscillation: interference produces instability, which triggers more interference.

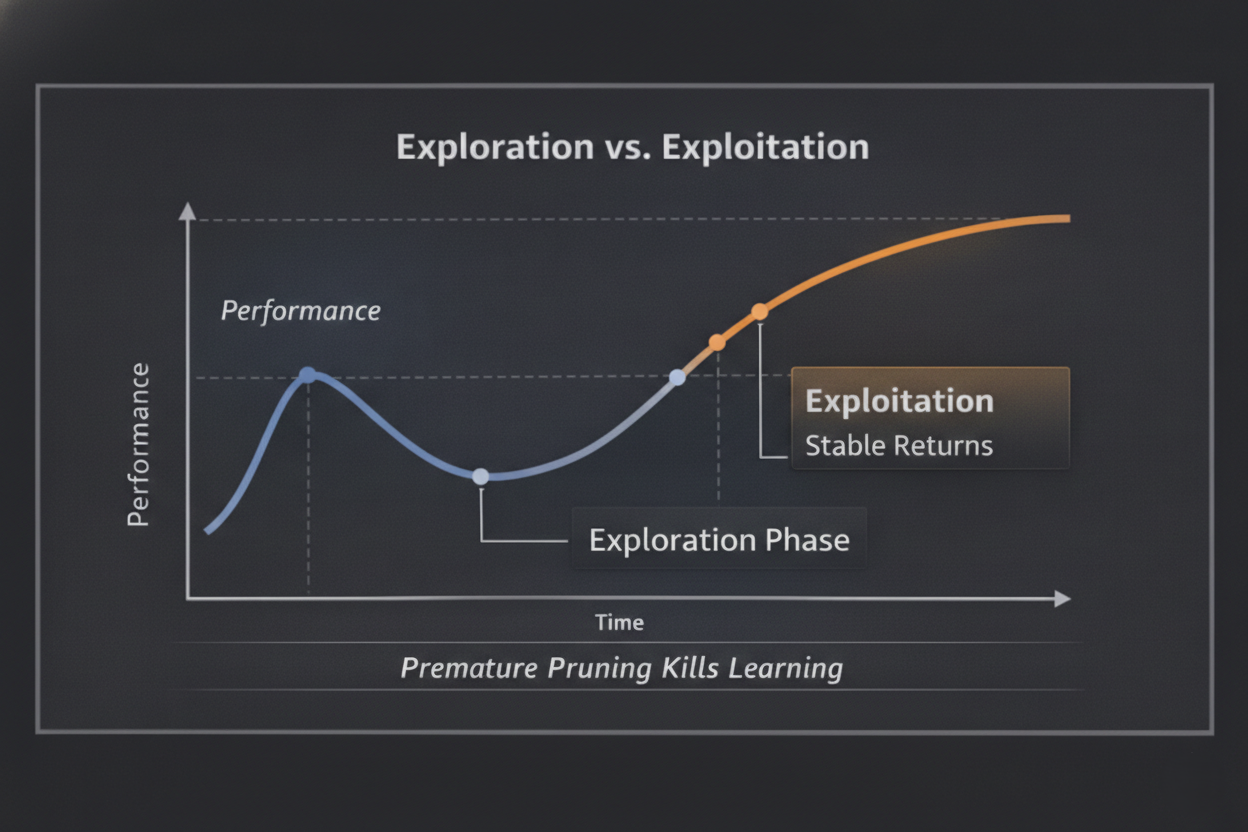

5. Exploration vs. Exploitation

Broad Match and Performance Max (PMax) are often discussed as “features.” They are actually policy learning accelerators.

● Broad Match expands the action space to explore semantically adjacent queries.

● PMax unifies decision layers to optimize against a composite reward.

These tools enable the system to balance Exploration (testing uncertain actions to learn) and Exploitation (doubling down on what works).

The friction arises because advertisers hate exploration. It looks like volatility and “wasted” spend. But without exploration, the policy cannot adapt to new intent patterns. When you restrict match types or aggressively add negatives, you “collapse the state-action coverage,” limiting channel intelligence.

6. Architecture as System Design

Once you accept that paid search is an RL system, account structure stops being about organization and starts being about signal concentration.

Granularity is a cost. Every campaign or asset group creates a partition in the data. Too many partitions starve the policy of signal.

● Structure changes are policy resets. Splitting a campaign or changing a bid strategy isn’t just an edit; it changes the training distribution.

● Budget is a training constraint. Budget isn’t just money; it’s learning bandwidth. Tight budgets create conservative policies that miss upside.

The Strategic Divide

The industry is splitting into two camps: Operators and System Designers.

Operators react to surface metrics, fight the platform, and chase short-term efficiency. System Designers reason about incentives, shape rewards, design for learning velocity, and diagnose before intervening.

You are not managing Google Ads anymore. You are participating in a learning system that infers intent probabilistically and adapts to a changing environment. The system is learning regardless. The only question is whether it is learning what you actually want.

How We Can Help You Transition

If your current strategy relies on fighting the machine rather than training it, we should talk. We specialize in designing the reward architectures and account structures that turn reinforcement learning from a volatility risk into a competitive moat.